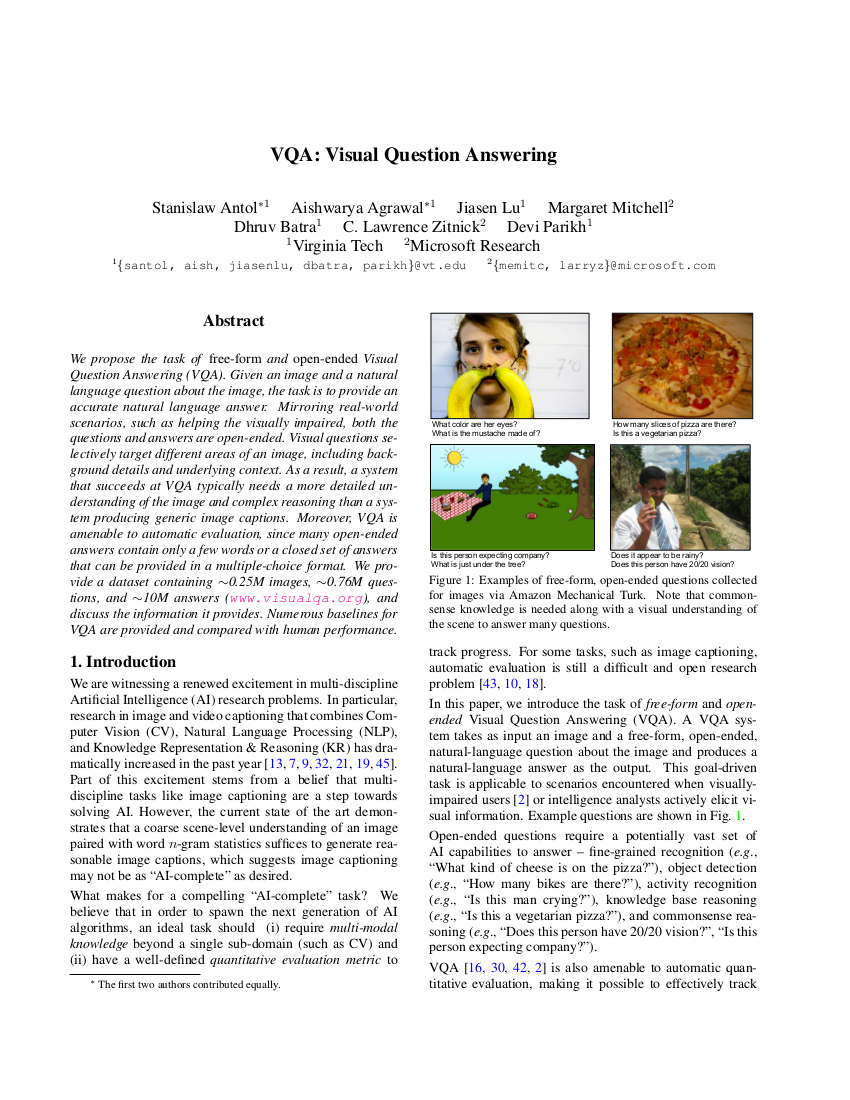

What is VQA?

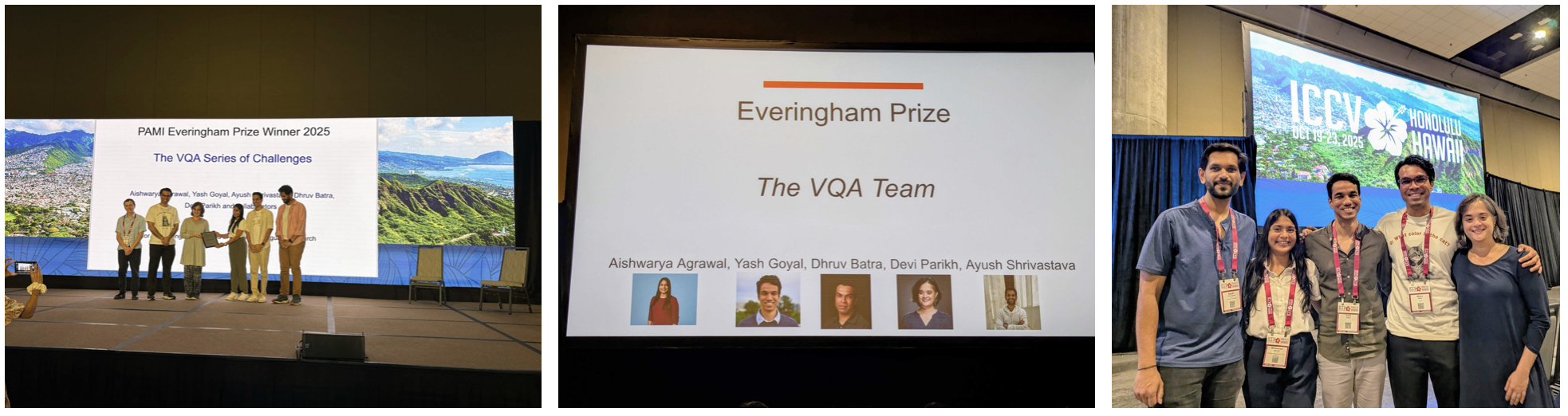

VQA is a new dataset containing open-ended questions about images. These questions require an understanding of vision, language and commonsense knowledge to answer.

- 265,016 images (COCO and abstract scenes)

- At least 3 questions (5.4 questions on average) per image

- 10 ground truth answers per question

- 3 plausible (but likely incorrect) answers per question

- Automatic evaluation metric

Subscribe to our group for updates!

Dataset

Details on downloading the latest dataset may be found on the download webpage.

-

April 2017: Full release (v2.0)

- 204,721 COCO images

(all of current train/val/test) - 1,105,904 questions

- 11,059,040 ground truth answers

- ⊕

July 2015: Beta v0.9 release

- ⊕

June 2015: Beta v0.1 release

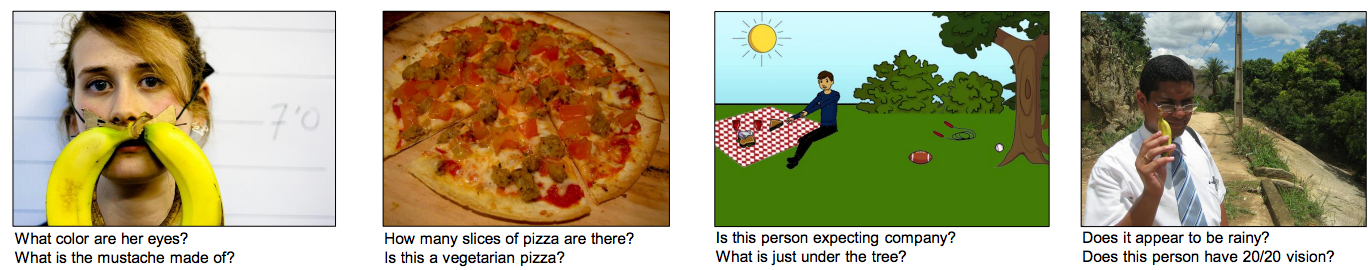

Award

2025 Mark Everingham Prize

for contributions to the Computer Vision community, awarded at ICCV 2025 to

The VQA Series of Challenges

Aishwarya Agrawal, Yash Goyal, Ayush Shrivastava, Dhruv Batra, Devi Parikh and contributers

For stimulating a new strand of vision and language research

Thanks to all the contributors and collaborators (in alphabetical order):

Peter Anderson, Stanislaw Antol, Arjun Chandrasekaran, Prithvijit Chattopadhyay, Xinlei Chen, Abhishek Das, Karan Desai, Sashank Gondala, Khushi Gupta, Drew Hudson, Rishabh Jain, Yash Kant, Tejas Khot, Satwik Kottur, Stefan Lee, Jiasen Lu, Margaret Mitchell, Nirbhay Modhe, Akrit Mohapatra, José M. F. Moura, Vishvak Murahari, Vivek Natarajan, Viraj Prabhu, Marcus Rohrbach, Meet Shah, Amanpreet Singh, Avi Singh, Douglas Summers-Stay, Deshraj Yadav, Peng Zhang, Larry Zitnick.

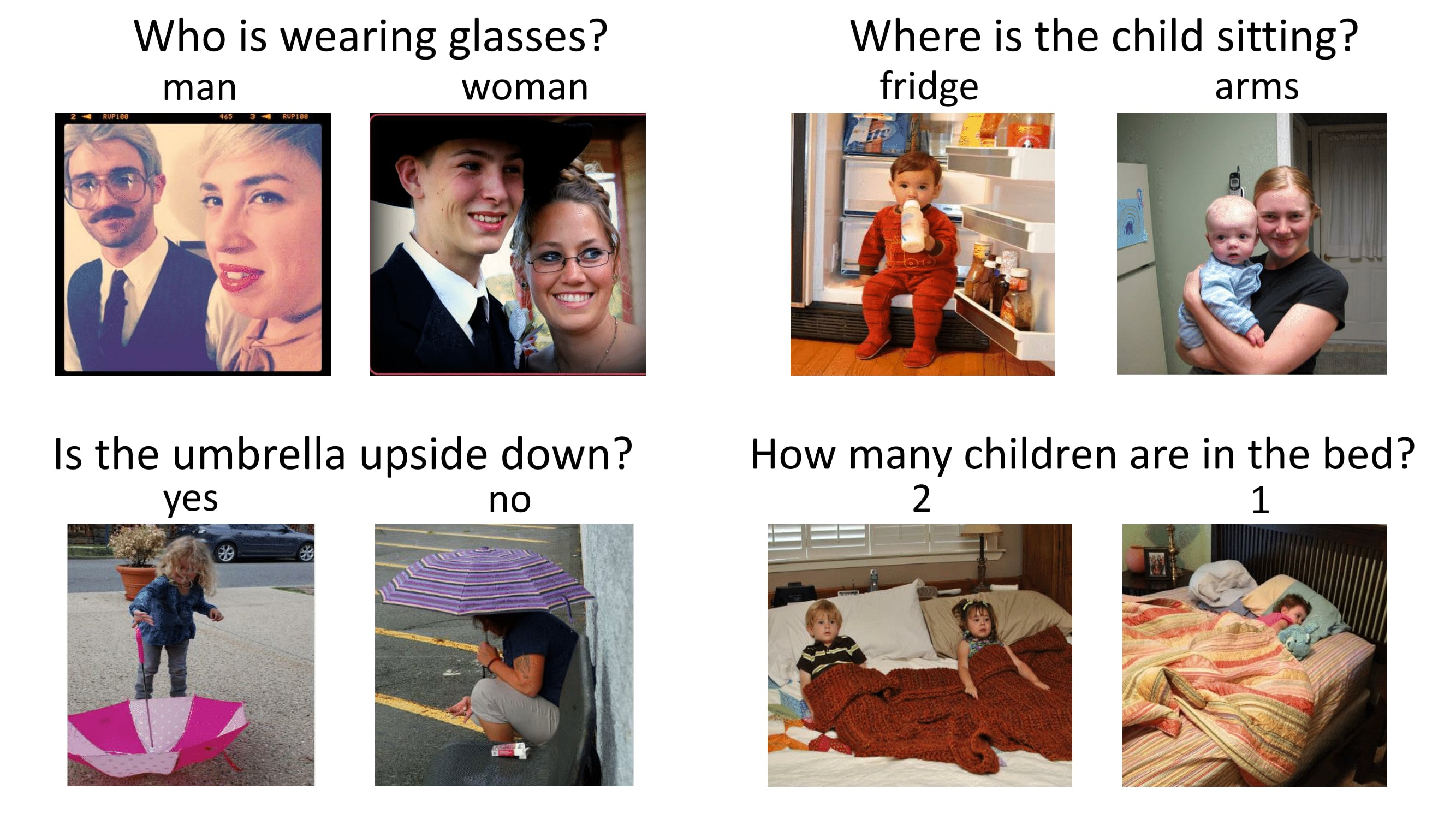

Papers

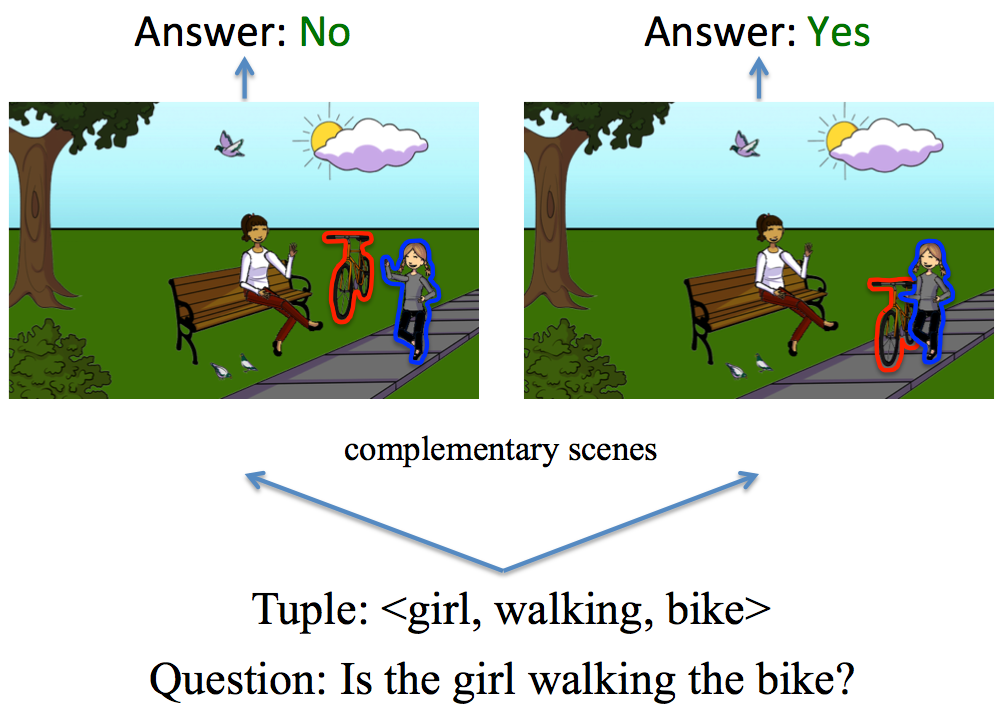

Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering (CVPR 2017)

Videos

Feedback

Any feedback is very welcome! Please send it to visualqa@gmail.com.