| Session | Presenter(s) and materials |
| --- | --- |
| Welcome | |
| A Simple Baseline for Visual Question Answering | Invited Talk: Yuandong Tian (Facebook AI Research) [Video] |
| See While You Say: Generating Human-like Language from Visual Input | Invited Talk: Margaret Mitchell (Microsoft Research) [Video] |
| Consistent questions in Visual Madlibs, and detecting objects for visual question answering | Invited Talk: Alex Berg (UNC Chapel Hill) |
| Morning Break | |
| Embodied Cognition: Linking vision, motor control and language | Invited Talk: Jitendra Malik (UC Berkeley) [Video] |
| Overview of challenge, winner announcements, analysis of results | Aishwarya Agrawal [Slides][Video] |
| Challenge Winner Talk (Abstract Scenes) | Andrew Shin*, Kuniaki Saito*, Yoshitaka Ushiku and Tatsuya Harada [Slides][Video] |
| Honorable Mention Spotlight (Real Images) | Aaditya Prakash [Slides][Video] |
| Challenge Runner-Up Talk (Real Images) | Hyeonseob Nam and Jeonghee Kim [Video] |
| Challenge Winner Talk (Real Images) | Akira Fukui, Dong Huk Park, Daylen Yang, Anna Rohrbach, Trevor Darrell and Marcus Rohrbach [Slides][Video] |
| Lunch (On your own) | |
| Towards vision and language aids for the blind | Invited Talk: Kevin Murphy (Google Research) [Video] |
| Recent Advances in Visual Question Learning | Trevor Darrell (UC Berkeley) [Video] |
| Poster session and Afternoon break | |
| VQA Divide and Conquer | Ali Farhadi (University of Washington) [Video] |
| Invited Talk | Mario Fritz (Max-Planck-Institut für Informatik) |
| Panel: Future Directions | |
| Closing Remarks | [Slides] |

VQA Challenge Workshop

at CVPR 2016, June 26, Las Vegas, USA


The VQA Challenge Winners and Honorable Mentions were announced at the VQA Challenge Workshop, where they were awarded Titan X GPUs sponsored by NVIDIA!

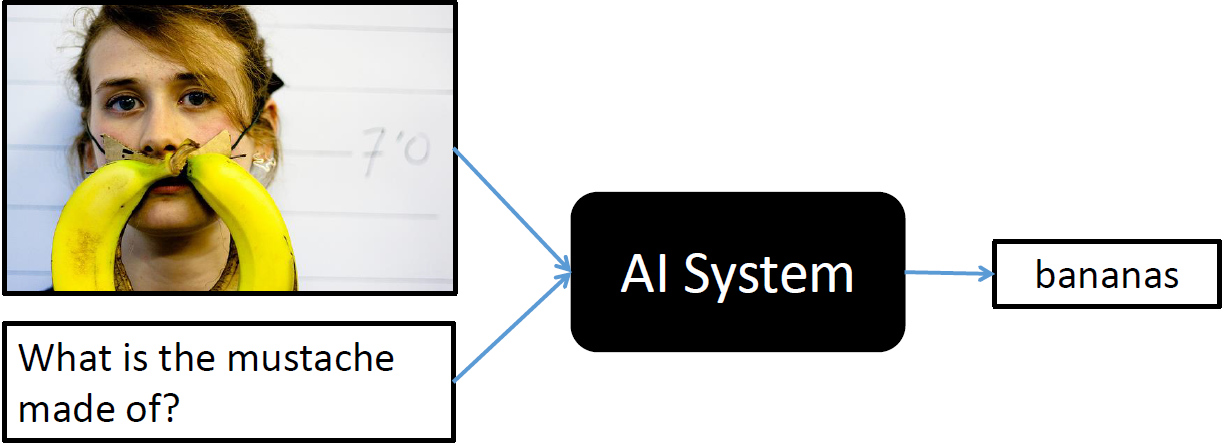

Introduction

The primary purpose of this workshop is to hold a challenge on Visual Question Answering on the VQA dataset. VQA is a new dataset containing open-ended and multiple-choice questions about images. These questions require an understanding of vision, language, and commonsense knowledge to answer. This workshop will provide an opportunity to benchmark algorithms on the VQA dataset and to identify state-of-the-art algorithms.

A secondary goal of this workshop is to bring together researchers interested in Visual Question Answering to share state-of-the-art approaches, best practices, and perspectives on future directions in multi-modal AI. We invite submissions of extended abstracts of at most 2 pages describing work in areas such as: Visual Question Answering, (Textual) Question Answering, Commonsense Knowledge, Video Question Answering, Image/Video Captioning and other problems at the intersection of vision and language. Accepted abstracts will be presented as posters at the workshop. The workshop will be held on June 26, 2016, at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2016.

Invited Speakers

Alex Berg

UNC Chapel Hill

Trevor Darrell

UC Berkeley

Ali Farhadi

University of Washington

Mario Fritz

Max-Planck-Institut für Informatik

Jitendra Malik

UC Berkeley

Margaret Mitchell

Microsoft Research

Kevin Murphy

Google Research

Yuandong Tian

Facebook AI Research

Program (Venue: Palace III)

Poster Presentation Instructions

1. Poster stands will be 8 feet wide by 4 feet high. Please review the CVPR16 poster template for more details on how to prepare your poster. You do not need to use this template, but please read the instructions carefully and prepare your posters accordingly.

2. Poster presenters are asked to install their posters between 12:25 PM and 2:00 PM. Push pins will be provided for attaching posters to the boards.

Submission Instructions

We invite submissions of extended abstracts of at most 2 pages describing work in areas such as: Visual Question Answering, (Textual) Question Answering, Commonsense Knowledge, Video Question Answering, Image/Video Captioning and other problems at the intersection of vision and language. Accepted submissions will be presented as posters at the workshop. The extended abstract should follow the CVPR formatting guidelines and be emailed as a single PDF to the contact address listed below. Please use the following LaTeX/Word templates.

Dual Submissions

We encourage submissions of relevant work that has been previously published, or is to be presented at the main conference. The accepted abstracts will be posted on the workshop website and will not appear in the official IEEE proceedings.

Dates

May 08, 2016 (extended): Submission Deadline

May 15, 2016: Decision to Authors

In case you need a decision before the CVPR early registration deadline (May 15), please let us know.

Organizers

Webmaster

Akrit Mohapatra

Virginia Tech

Sponsors

Contact: visualqa@gmail.com