Welcome to the VQA Challenge 2017!

Overview Challenge Guidelines EvalAI: A New Evaluation Platform! Leaderboard

Papers reporting results on the VQA v2.0 dataset should --

1) Report test-standard accuracies, which can be calculated using either of the non-test-dev phases, i.e., "test2017" or "Challenge test2017" at oe-real-2017.

2) Compare their test-standard accuracies with those on the test2017 leaderboard [oe-real-leaderboard-2017].

Overview

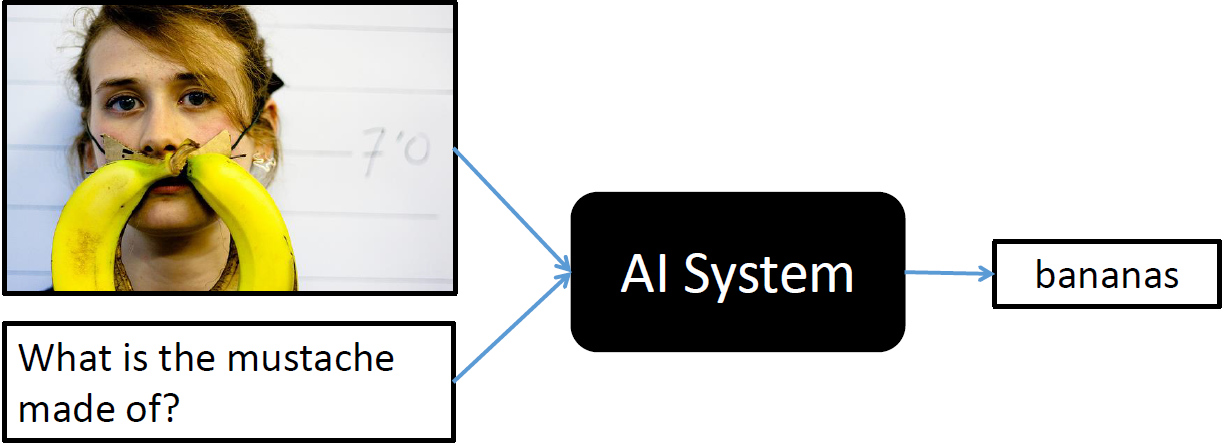

We are pleased to announce the Visual Question Answering (VQA) Challenge 2017. Given an image and a natural language question about the image, the task is to provide an accurate natural language answer. Visual questions selectively target different areas of an image, including background details and underlying context.

Teams are encouraged to compete in the following VQA challenge:

- Open-Ended for balanced real images: Submission and Leaderboard

The VQA v2.0 train, validation and test sets, containing more than 250K images and 1.1M questions, are available on the download page. All questions are annotated with 10 concise, open-ended answers each. Annotations on the training and validation sets are publicly available.

VQA Challenge 2017 is the second edition of the VQA Challenge. VQA Challenge 2016 was organized last year, and the results were announced at VQA Challenge Workshop, CVPR 2016. More details about VQA Challenge 2016 can be found here.

Answers to some common questions about the challenge can be found in the FAQ section.

Dates

March 9, 2017 Version 2.0 of the VQA train and val data released

April 26, 2017 Version 2.0 of the VQA test data released and test evaluation servers running

June 30, 2017 Extended Submission deadline at 23:59:59 UTC

After the challenge deadline, all challenge participant results on test-standard split will be made public on a test-standard leaderboard.

Challenge Guidelines

Following COCO, we have divided the test set for balanced real images into a number of splits, including test-dev, test-standard, test-challenge, and test-reserve, to limit overfitting while giving researchers more flexibility to test their system. Test-dev is used for debugging and validation experiments and allows for maximum 10 submissions per day. Test-standard is the default test data for the VQA competition. When comparing to the state of the art (e.g., in papers), results should be reported on test-standard. Test-standard is also used to maintain a public leaderboard that is updated upon submission. Test-reserve is used to protect against possible overfitting. If there are substantial differences between a method's scores on test-standard and test-reserve, this will raise a red-flag and prompt further investigation. Results on test-reserve will not be publicly revealed. Finally, test-challenge is used to determine the winners of the challenge.

The evaluation page lists detailed information regarding how submissions will be scored. The evaluation servers are open. This year we are hosting the evaluation servers on a new Evaluation Platform called EvalAI, developed by the CloudCV team. EvalAI is an open-source web platform designed for organizing and participating in challenges to push the state of the art on AI tasks. Click here to know more about EvalAI. We encourage people to first submit to "Real test-dev2017 (oe)" phase to make sure that you understand the submission procedure, as it is identical to the full test set submission procedure. Note that the "Real test-dev2017 (oe)" and "Real Challenge test2017 (oe)" evaluation servers do not have a public leaderboard..

To enter the competition, first you need to create an account on EvalAI. We allow people to enter our challenge either privately or publicly. Any submissions to the "Real Challenge test2017 (oe)" phase will be considered to be participating in the challenge. For submissions to the "Real test2017 (oe)" phase, only ones that were submitted before the challenge deadline and posted to the public leaderboard will be considered to be participating in the challenge.

Before uploading your results to EvalAI, you will need to create a JSON file containing your results in the correct format as described on the evaluation page.

To submit your JSON file to the VQA evaluation servers, click on the “Submit” tab on the VQA Real Image Challenge (Open-Ended) 2017 page on EvalAI. Select the phase ("Real test-dev2017 (oe)" or "Real test2017 (oe)" or "Real Challenge test2017 (oe)"). Please select the JSON file to upload and fill in the required fields such as "method name" and "method description" and click “Submit”. After the file is uploaded the evaluation server will begin processing. To view the status of your submission please go to “My Submissions” tab and choose the phase to the results file was uploaded. Please be patient, the evaluation may take quite some time to complete (~1min on test-dev and ~3min on the full test set). If the status of your submission is “Failed” please check your "Stderr File" for the corresponding submission.

After evaluation is complete and the server shows a status of “Finished”, you will have the option to download your evaluation results by selecting “Result File” for the corresponding submission. The "Result File" will contain the aggregated accuracy on the corresponding test-split (test-dev split for "Real test-dev2017 (oe)" phase, test-standard and test-dev splits for both "Real test2017 (oe)" and "Real Challenge test2017 (oe)" phases). If you want your submission to appear on the public leaderboard, check the box under "Show on Leaderboard" for the corresponding submission.

Please limit the number of entries to the challenge evaluation server to a reasonable number, e.g., one entry per paper. To avoid overfitting, the number of submissions per user is limited to 1 upload per day and a maximum of 5 submissions per user. It is not acceptable to create multiple accounts for a single project to circumvent this limit. The exception to this is if a group publishes two papers describing unrelated methods, in this case both sets of results can be submitted for evaluation. However, test-dev allows for 10 submissions per day. Please refer to the section on "Test-Dev Best Practices" in the MSCOCO detection challenge page for more information about the test-dev set.

The download page contains links to all VQA v2.0 train/val/test images, questions, and associated annotations (for train/val only). Please specify any and all external data used for training in the "method description" when uploading results to the evaluation server.

Results must be submitted to the evaluation server by the challenge deadline. Competitors' algorithms will be evaluated according to the rules described on the evaluation page. Challenge participants with the most successful and innovative methods will be invited to present.

Tools and Instructions

We provide API support for the VQA annotations and evaluation code. To download the VQA API, please visit our GitHub repository. For an overview of how to use the API, please visit the download page and consult the section entitled VQA API. To obtain API support for COCO images, please visit the COCO download page. To obtain API support for abstract scenes, please visit the GitHub repository.

For challenge related questions, please contact visualqa@gmail.com. In case of technical questions related to EvalAI, please post on EvalAI's mailing list.

Frequently Asked Questions (FAQ)

As a reminder, any submissions before the challenge deadline whose results are made publicly visible on the "Real test2017 (oe)" leaderboard or are submitted to the "Real Challenge test2017 (oe)" phase will be enrolled in the challenge. For further clarity, we answer some common questions below:

- Q: What do I do if I want to make my test-standard results public and participate in the challenge? A: Making your results public (i.e., visible on the leaderboard) on the "Real test2017 (oe)" phase implies that you are participating in the challenge.

- Q: What do I do if I want to make my test-standard results public, but I do not want to participate in the challenge? A: We do not allow for this option.

- Q: What do I do if I want to participate in the challenge, but I do not want to make my test-standard results public yet? A: Submitting to the "Real Challenge test2017 (oe)" phase was created for this scenario.

- Q: When will I find out my test-challenge accuracies? A: We will reveal challenge results some time after the deadline. Results will first be announced at our CVPR VQA Challenge workshop 2017.

- Q: Can I participate from more than one EvalAI team in the VQA challenge? A: No, you are allowed to participate from one team only.

- Q: Can I add other members to my EvalAI team? A: Yes.

- Q: Is the daily/overall submission limit for a user or for a team? A: It is for a team.

Organizers

EvalAI: A New Evaluation Platform!

We are hosting VQA Challenge 2017 on EvalAI. EvalAI is an open-source web platform that aims to help researchers, students and data scientists create, collaborate and participate in various AI challenges. To overcome the increasing difficulties related to slower execution, flexibility and portability of similar platforms in benchmarking algorithms solving various AI tasks, EvalAI was started as an open-source initiative by the CloudCV team. By providing swift and robust backends based on map-reduce frameworks that speed up evaluation on the fly, EvalAI aims to make it easier for researchers to reproduce results from technical papers and perform reliable and accurate analyses. On the front-end, these are represented via active central leaderboards and dynamic submission interfaces that abstract the need for users to write time-consuming evaluation scripts on their end to single-click uploads. For more details, visit https://evalai.cloudcv.org.

If you are interested in contributing to EvalAI to make a bigger impact on the AI research community, please visit EvalAI's Github Repository.