Welcome

Devi Parikh (Georgia Tech / Facebook AI Research) [Slides] [Video]

Invited Talk

Alex Schwing (University of Illinois at Urbana-Champaign) [Video]

Invited Talk

Lisa Hendricks (University of California, Berkeley) [Slides] [Video]

VQA Challenge Talk (Overview, Analysis and Winner Announcement)

Ayush Shrivastava (Georgia Tech) [Slides] [Video]

VQA Challenge Runner-up Talk

Team: MSM@MSRA; Members: Bei Liu, Zhicheng Huang, Zhaoyang Zeng, Zheyu Chen and Jianlong Fu [Slides] [Video]

VQA Challenge Winner Talk

Team: MIL@HDU; Members: Zhou Yu, Jun Yu, Yuhao Cui and Jing Li [Slides] [Video]

Morning Break

Invited Talk

Christopher Manning (Stanford University) [Slides] [Video]

GQA Challenge Talk (Overview, Analysis and Winner Announcement)

Drew Hudson (Stanford University) [Slides] [Video]

GQA Challenge Winner Talk

Team: Kakao Brain; Members: Eun-Sol Kim, Yu-Jung Heo and Woo-Young Kang [Slides] [Video]

TextVQA Challenge Talk (Overview, Analysis and Winner Announcement)

Amanpreet Singh (Facebook AI Research) [Slides] [Video]

TextVQA Challenge Runner-up Talk

Team: Team-Schwail; Members: Harsh Agrawal, Jyoti Aneja, Maghav Kumar and Alex Schwing [Slides] [Video]

TextVQA Challenge Winner Talk

Team: DCD_ZJU; Members: Yuetan Lin, Hongrui Zhao, Yanan Li and Donghui Wang [Slides] [Video]

Lunch (On your own)

Invited Talk

Karl Moritz Hermann (Google DeepMind) [Slides] [Video]

Invited Talk

Layla El Asri (Microsoft Research) [Slides] [Video]

Visual Dialog Challenge Talk (Overview, Analysis and Winner Announcement)

Abhishek Das (Georgia Tech) [Slides] [Video]

Alibaba DAMO Academy

Visual Dialog Challenge Winner Talk

Team: MReaL - BDAI; Members: Jiaxin Qi, Yulei Niu, Hanwang Zhang, Jianqiang Huang, Xian-Sheng Hua and Ji-Rong Wen [Slides] [Video]

Poster session and Afternoon break

Location: Pacific Arena Ballroom; Allotted Poster Boards: #168 to #207

Invited Talk

Sanja Fidler (University of Toronto / NVIDIA) [Video]

Invited Talk

Yoav Artzi (Cornell University) [Slides] [Video]

Panel: Future Directions

[Video]

Closing Remarks

Devi Parikh (Georgia Tech / Facebook AI Research) [Slides] [Video]

Visual Question Answering and Dialog Workshop

Location: Seaside Ballroom B, Long Beach Convention & Entertainment Center

at CVPR 2019, June 17, Long Beach, California, USA

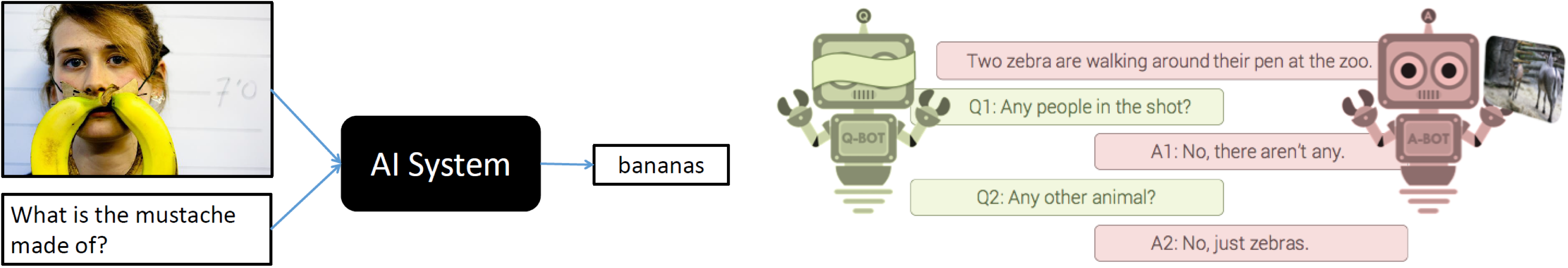

Introduction

The primary goal of this workshop is two-fold. The first is to benchmark progress in Visual Question Answering and Visual Dialog.

Visual Question Answering

There will be three tracks in the Visual Question Answering Challenge this year.

- VQA 2.0: This track is the 4th edition of the VQA Challenge, held on the VQA v2.0 dataset introduced in Goyal et al., CVPR 2017. The 2nd and 3rd editions were organised at CVPR 2017 and CVPR 2018 on the VQA v2.0 dataset, and the 1st edition was organised at CVPR 2016 on the VQA v1.0 dataset introduced in Antol et al., ICCV 2015. VQA v2.0 is more balanced and reduces language biases over VQA v1.0, and is about twice the size of VQA v1.0.

  Challenge link: https://visualqa.org/challenge

  Evaluation Server: https://evalai.cloudcv.org/web/challenges/challenge-page/163/overview

  Submission Deadline: May 10, 2019 23:59:59 GMT

- TextVQA: This track is the 1st challenge on the TextVQA dataset introduced in Singh et al., 2019. TextVQA requires algorithms to look at an image, read text in the image, reason about it, and answer a given question.

  Challenge link: https://textvqa.org/challenge

  Evaluation Server: https://evalai.cloudcv.org/web/challenges/challenge-page/244/overview

  Submission Deadline: May 27, 2019 23:59:59 GMT [Extended]

- GQA: This track is the 1st challenge on the GQA dataset introduced in Hudson et al., 2019. GQA is a new dataset that focuses on real-world compositional reasoning. The dataset contains 20M image and question pairs, each of which comes with an underlying structured representation of its semantics. The dataset is complemented with a suite of new evaluation metrics to test consistency, validity and grounding. To learn more about GQA, visit https://visualreasoning.org.

  Challenge link: https://cs.stanford.edu/people/dorarad/gqa/challenge.html

  Evaluation Server: https://evalai.cloudcv.org/web/challenges/challenge-page/225/overview

  Submission Deadline: May 15, 2019 23:59:59 GMT

Visual Dialog

The 2nd edition of the Visual Dialog Challenge will be hosted on the VisDial v1.0 dataset introduced in Das et al., CVPR 2017. The 1st edition of the Visual Dialog Challenge was organised on the VisDial v1.0 dataset at ECCV 2018. See leaderboard and analysis here. Visual Dialog requires an AI agent to hold a meaningful dialog with humans in natural, conversational language about visual content. Specifically, given an image and a dialog history (consisting of the image caption and a sequence of previous questions and answers), the agent has to answer a follow-up question in the dialog.

Challenge link: https://visualdialog.org/challenge

Evaluation Server: https://evalai.cloudcv.org/web/challenges/challenge-page/161/overview

Submission Deadline: May 18, 2019 23:59:59 GMT

The second goal of this workshop is to continue to bring together researchers interested in visually-grounded question answering, dialog systems, and language in general to share state-of-the-art approaches, best practices, and future directions in multi-modal AI. In addition to invited talks from established researchers, we invite submissions of extended abstracts of at most 2 pages describing work in the relevant areas including: Visual Question Answering, Visual Dialog, (Textual) Question Answering, (Textual) Dialog Systems, Commonsense knowledge, Vision + Language, etc. All accepted abstracts will be presented as posters at the workshop to disseminate ideas. The workshop is on June 17th, 2019, at the IEEE Conference on Computer Vision and Pattern Recognition, 2019.

Prizes

The winning team of each track will receive Google Cloud Platform credits worth $10k!

Invited Speakers

Alex Schwing

University of Illinois at Urbana-Champaign

Lisa Hendricks

University of California, Berkeley

Yoav Artzi

Cornell University

Layla El Asri

Microsoft Research

Christopher Manning

Stanford University

Sanja Fidler

University of Toronto / NVIDIA

Karl Moritz Hermann

Google DeepMind

(Tentative) Program (Venue: Seaside Ballroom B, Convention Center)

Poster Presentation Instructions

The physical dimensions of the poster stands that will be available this year are 8 feet wide by 4 feet high. Please review the reference poster template for more details on how to prepare your poster. You do NOT have to use this template, but please read the instructions carefully and prepare your posters accordingly.

Submission Instructions

We invite submissions of extended abstracts of at most 2 pages describing work in areas such as: Visual Question Answering, Visual Dialog, (Textual) Question Answering, (Textual) Dialog Systems, Commonsense knowledge, Video Question Answering, Video Dialog, Vision + Language, and Vision + Language + Action (Embodied Agents). Accepted submissions will be presented as posters at the workshop. The extended abstract should follow the CVPR formatting guidelines and be emailed as a single PDF to the email address listed below. Please use the following LaTeX/Word templates.

Dual Submissions

We encourage submissions of relevant work that has been previously published, or is to be presented at the main conference. The accepted abstracts will not appear in the official IEEE proceedings.

Where to Submit?

Please send your abstracts to visualqa.workshop@gmail.com

Dates

Jan 2019: Challenge Announcement

mid-May 2019: Challenge Submission

May 24, 2019 [Extended]: Workshop Paper Submission

Jun 2, 2019: Notification to Authors

Jun 17, 2019: Workshop

Organizers

Vivek Natarajan

Contact: visualqa@gmail.com