Welcome

Devi Parikh (Georgia Tech / Facebook AI Research) [Slides]

Invited Talk

Jeffrey Bigham (Carnegie Mellon University) [Slides]

Invited Talk

Adriana Kovashka (University of Pittsburgh) [Slides]

Invited Talk

Jacob Andreas (Microsoft Research --> MIT) [Slides]

Morning Break

Invited Talk

Jitendra Malik (University of California, Berkeley & Facebook AI Research) [Slides] [Video]

Overview of challenge, winner announcements, analysis of results

Yash Goyal (Georgia Tech) [Slides] [Video]

Challenge Runner-Up Talk

Jin-Hwa Kim, Jaehyun Jun and Byoung-Tak Zhang [Slides] [Video]

Challenge Runner-Up Talk

Zhou Yu, Jun Yu, Chenchao Xiang, Liang Wang, Dalu Guo, Qingming Huang, Jianping Fan and Dacheng Tao [Slides] [Video]

Challenge Winner Talk

Yu Jiang*, Vivek Natarajan*, Xinlei Chen*, Marcus Rohrbach, Dhruv Batra and Devi Parikh [Slides] [Video]

Lunch (on your own)

Invited Talk

Ross Girshick (Facebook AI Research) [Slides]

Invited Talk

Yejin Choi (University of Washington & AI2)

Invited Talk

Nasrin Mostafazadeh (Elemental Cognition) [Slides]

Poster Session and Afternoon Break

Invited Talk

Aaron Courville (University of Montreal) [Slides]

Invited Talk

Peter Anderson (Australian National University) [Slides]

Panel: Future Directions

Closing Remarks

Dhruv Batra (Georgia Tech / Facebook AI Research) [Slides]

VQA Challenge and Visual Dialog Workshop

Location: Room 155A, Calvin L. Rampton Salt Palace Convention Center

at CVPR 2018, June 18, Salt Lake City, Utah, USA


Introduction

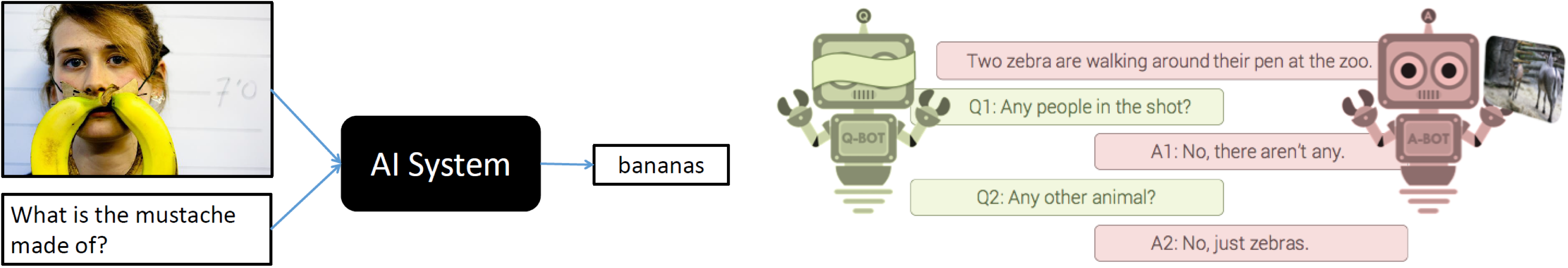

This workshop has two primary goals. The first is to benchmark progress in Visual Question Answering by hosting the 3rd edition of the Visual Question Answering Challenge on the VQA v2.0 dataset introduced in Goyal et al., CVPR 2017. The 2nd edition of the VQA Challenge was organized at CVPR 2017 on the VQA v2.0 dataset, and the 1st edition was organized at CVPR 2016 on the 1st edition (v1.0) of the VQA dataset introduced in Antol et al., ICCV 2015. Compared with VQA v1.0, VQA v2.0 is more balanced, has reduced language biases, and is about twice the size.

Our idea in creating this new "balanced" VQA dataset is the following: for every (image, question, answer) triplet (I, Q, A) in the VQA v1.0 dataset, we identify a semantically similar image I' for which the answer A' to Q differs from A. Both the old (I, Q, A) and the new (I', Q, A') triplets are included in the VQA v2.0 dataset, balancing the VQA v1.0 dataset on a per-question basis. Since I and I' are semantically similar, a VQA model must understand the subtle differences between I and I' to answer correctly for both images; it cannot succeed as easily by "guessing" from the language alone. This workshop will provide an opportunity to benchmark algorithms on VQA v2.0 and to identify state-of-the-art algorithms that truly understand the image content, which is necessary to perform well on this balanced VQA dataset.
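The per-question balancing idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure actually used to build VQA v2.0: `Triplet`, `balance`, `accuracy`, `always_yes`, and the `find_complementary` callback are hypothetical names, and the callback merely stands in for however a similar image I' with a different answer A' is chosen. The toy usage shows why balancing penalizes language-only guessing: a "blind" predictor that always answers "yes" is perfect on a single v1.0-style triplet but drops to 50% once the complementary triplet is added.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Triplet:
    image_id: str
    question: str
    answer: str


# Hypothetical hook: returns (I', A') for a semantically similar image I'
# whose answer to the same question differs, or None if none was found.
Finder = Callable[[Triplet], Optional[tuple[str, str]]]


def balance(v1: list[Triplet], find_complementary: Finder) -> list[Triplet]:
    """Pair every (I, Q, A) with a complementary (I', Q, A') where A' != A."""
    balanced: list[Triplet] = []
    for t in v1:
        balanced.append(t)  # keep the original (I, Q, A)
        found = find_complementary(t)
        if found is not None and found[1] != t.answer:
            image2, answer2 = found
            balanced.append(Triplet(image2, t.question, answer2))  # add (I', Q, A')
    return balanced


def accuracy(data: list[Triplet], predict: Callable[[Triplet], str]) -> float:
    """Fraction of triplets whose predicted answer matches the ground truth."""
    return sum(predict(t) == t.answer for t in data) / len(data)


def always_yes(t: Triplet) -> str:
    """A 'blind' baseline that ignores the image entirely."""
    return "yes"


v1 = [Triplet("img_1", "Is the umbrella upside down?", "yes")]
lookup = {"img_1": ("img_2", "no")}  # toy stand-in for complementary-image search
v2 = balance(v1, lambda t: lookup.get(t.image_id))

print(accuracy(v1, always_yes))  # 1.0
print(accuracy(v2, always_yes))  # 0.5
```

Because the blind predictor sees only Q, it must give the same answer for I and I', so on each balanced pair it can be right at most once.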

The second goal of this workshop is to continue to bring together researchers interested in visually grounded question answering and dialog systems to share state-of-the-art approaches, best practices, and future directions in multi-modal AI. In particular, this year the workshop committee will extend the scope of invited talks, submitted papers, and poster presentations to include work on the related topic of Visual Dialog, introduced by Das et al., CVPR 2017. The concrete task in Visual Dialog is as follows: given an image I, a dialog history consisting of a sequence of question-answer pairs (Q1: "How many people are in wheelchairs?", A1: "Two", Q2: "What are their genders?", A2: "One male and one female"), and a natural-language follow-up question (Q3: "Which one is holding a racket?"), the machine must answer the question in free-form natural language (A3: "The woman"). Beyond VQA-style reasoning, Visual Dialog requires rich language understanding, including co-reference resolution and memory.
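To make the task's inputs concrete, here is a minimal Python sketch of a single Visual Dialog example built from the wheelchair dialog. The class and method names (`Turn`, `Dialog`, `model_input`, `add_turn`) and the image identifier are hypothetical, and the image is represented only by an ID; this is an illustration of the task setup, not the official Visual Dialog data format or API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Turn:
    question: str
    answer: str


@dataclass
class Dialog:
    image_id: str  # hypothetical stand-in for the image I
    history: list[Turn] = field(default_factory=list)

    def model_input(self, question: str) -> tuple[str, tuple[Turn, ...], str]:
        """Everything a model sees for a follow-up: image, prior rounds, new question."""
        return self.image_id, tuple(self.history), question

    def add_turn(self, question: str, answer: str) -> None:
        """Append a completed round so later questions can refer back to it."""
        self.history.append(Turn(question, answer))


dialog = Dialog("img_wheelchairs")
dialog.add_turn("How many people are in wheelchairs?", "Two")
dialog.add_turn("What are their genders?", "One male and one female")

image, history, q3 = dialog.model_input("Which one is holding a racket?")
print(len(history))  # 2
# Answering Q3 ("The woman") requires resolving "one" against the earlier
# rounds, i.e. co-reference over the dialog history, not just the image.
```

Passing the history as an immutable tuple keeps the model's view of earlier rounds fixed while the dialog object continues to grow.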

We invite submissions of extended abstracts of >= 2 pages describing work in relevant areas, including: Visual Question Answering, Visual Dialog, (Textual) Question Answering, (Textual) Dialog Systems, Commonsense Knowledge, Video Question Answering, Video Dialog, Vision + Language, and Vision + Language + Action (Embodied Agents). All accepted abstracts will be presented as posters at the workshop, which will be held on June 18, 2018, at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2018.

Invited Speakers

Aaron Courville

University of Montreal

Adriana Kovashka

University of Pittsburgh

Jacob Andreas

Microsoft Research --> MIT

Jeffrey Bigham

Carnegie Mellon University

Jitendra Malik

University of California, Berkeley & Facebook AI Research

Nasrin Mostafazadeh

Elemental Cognition

Peter Anderson

Australian National University

Ross Girshick

Facebook AI Research

Yejin Choi

University of Washington & AI2

(Tentative) Program (Venue: 155A)

Poster Presentation Instructions

Poster stands will be 8 feet wide by 4 feet high. Please review the CVPR18 poster template for more details on how to prepare your poster. You do not need to use this template, but please read the instructions carefully and prepare your posters accordingly.

Submission Instructions

We invite submissions of extended abstracts of >= 2 pages describing work in areas such as: Visual Question Answering, Visual Dialog, (Textual) Question Answering, (Textual) Dialog Systems, Commonsense Knowledge, Video Question Answering, Video Dialog, Vision + Language, and Vision + Language + Action (Embodied Agents). Accepted submissions will be presented as posters at the workshop. The extended abstract should follow the CVPR formatting guidelines and be emailed as a single PDF to the email address listed below. Please use the following LaTeX/Word templates.

Dual Submissions

We encourage submissions of relevant work that has been previously published or is to be presented at the main conference. The accepted abstracts will not appear in the official IEEE proceedings.

Dates

Submission Deadline: May 25, 2018 (extended)

Decision to Authors: May 28, 2018

If you missed the deadline and are interested in presenting at the workshop, please shoot us an email.

Organizers

Contact: visualqa@gmail.com